UIs will never be the same (nor will testing them)

Man, what’s gonna happen to UIs is insanely cool

I’m not a believer that chatbots will replace UIs completely. And that’s not because I doubt how crazy good chat interfaces are becoming, it’s because of how appealing beautiful UIs are to us humans. GUIs did not kill terminals, but very few people use only the command line, because UIs are nice to look at.

But how limited we are by our interfaces!

How come my CRM looks nearly the same on the first day I use it and 6 months in? Why do I have to go through that general-purpose onboarding flow that, let’s face it, I’m gonna skip? It would be amazing if the UI adapted to me, showing me more and more as I get better.

This is the dream of any PM who has worked on onboarding flows. Let’s make it personal. Let’s adapt to user personas. Let’s make the right functionality discoverable at the right moment, then let it recede into the background when the user needs it less.

Unfortunately, it frequently ends with “Let’s build 3 versions of the UI. One for new users. One once they’ve onboarded. Also let’s add that ‘expert mode’ button somewhere to unhide more functionality.” I’m certainly guilty of having done that in the past.

It’s hard to build and maintain a great UI. It’s even harder to maintain 3 versions of a great UI. And it’s near impossible to build a UI that’s personalized to each user.

Enter generative AI. Instead of designing a few onboarding workflows and layouts that map to a handful of user profiles (most often ‘onboarding’ and ‘active’), then hardcoding them, what if the UI were generated at runtime? What if each user could get a personalized version of the UI based on what they type and on their usage patterns? What if new functionality popped up as I needed it, unprompted? It seems that this world is becoming possible.

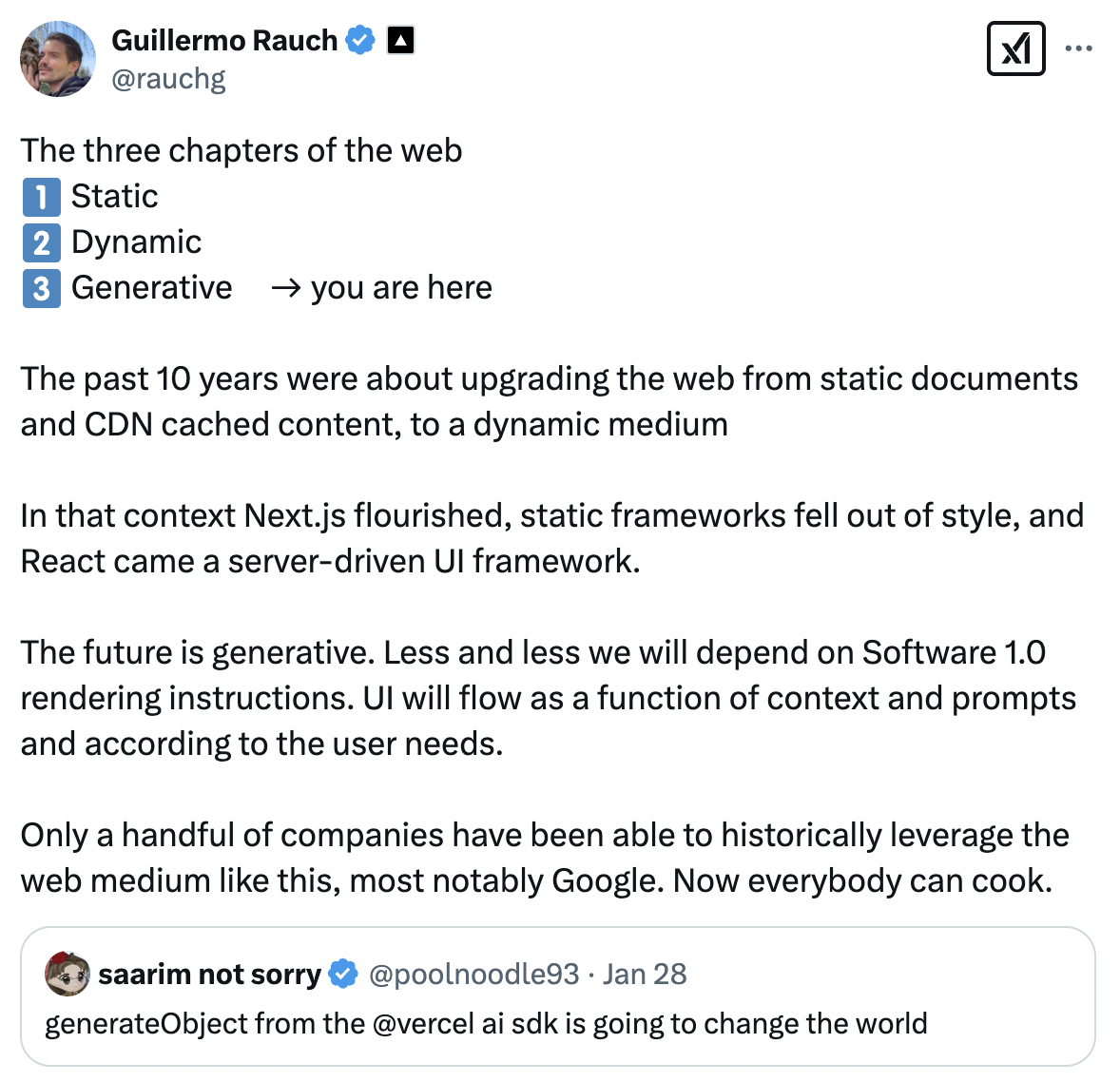

As best said by Vercel’s Guillermo Rauch:

We see early signs of this being baked into frontend tooling (e.g. Vercel’s generateObject method).

And beyond runtime-generated content, we’ll see runtime-generated interfaces. It’s very likely entire parts of the DOM will be generated for each user, to build an insanely personalized experience.
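To make that a bit more concrete, here is a minimal sketch of what an app might do with a UI fragment generated at runtime: treat it as untrusted data and validate it before rendering anything. Everything in it is an assumption for illustration (the PanelSpec shape, the widget kinds, the action names); it is not how any particular SDK works.

```typescript
// Hypothetical shape of a UI fragment a model might return at runtime.
type Widget =
  | { kind: "button"; label: string; action: string }
  | { kind: "tip"; text: string };

interface PanelSpec {
  title: string;
  widgets: Widget[];
}

// Hypothetical actions the app actually implements; anything else is rejected.
const KNOWN_ACTIONS = new Set(["createContact", "importCsv", "openPipeline"]);

function isRecord(value: unknown): value is Record<string, unknown> {
  return typeof value === "object" && value !== null && !Array.isArray(value);
}

// Returns a typed widget, or null if the raw value does not match a known kind.
function parseWidget(raw: unknown): Widget | null {
  if (!isRecord(raw)) return null;
  const { kind, label, action, text } = raw;
  if (kind === "button") {
    if (typeof label !== "string" || label.length === 0) return null;
    if (typeof action !== "string" || !KNOWN_ACTIONS.has(action)) return null;
    return { kind: "button", label, action };
  }
  if (kind === "tip" && typeof text === "string") {
    return { kind: "tip", text };
  }
  return null;
}

// Returns a typed spec, or null when the generated payload cannot be trusted.
function parsePanelSpec(json: string): PanelSpec | null {
  let raw: unknown;
  try {
    raw = JSON.parse(json);
  } catch {
    return null;
  }
  if (!isRecord(raw)) return null;
  const { title, widgets } = raw;
  if (typeof title !== "string" || !Array.isArray(widgets)) return null;

  const parsed: Widget[] = [];
  for (const item of widgets) {
    const widget = parseWidget(item);
    if (widget === null) return null; // one bad widget rejects the whole panel
    parsed.push(widget);
  }
  return { title, widgets: parsed };
}
```

The design choice here is deliberately strict: the model can decide which widgets to show and what to call them, but it can only wire them to actions the app already knows about.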

What it means for testing

Now coming back to testing, interfaces generated at runtime open up a whole new game. Playing back Playwright scripts against that type of interface just won’t cut it.

So far, the rigidity of testing frameworks has mostly shown up as painful test maintenance. Change the text of the “submit” button to “Go!” and the testing script breaks. End-to-end tests are tightly coupled to front-end implementation. There’s a huge gap between what you would tell a QA tester (check that you can log in, please!) and what your testing script actually checks.
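Here is a toy illustration of that coupling. It does not use a real testing framework: the page is just an array of elements, and findByRoleAndText is a hypothetical stand-in for a selector-style lookup.

```typescript
// A toy stand-in for a rendered page: just the elements a test can see.
interface UiElement {
  role: "button" | "textbox" | "link";
  text: string;
}

// Selector-style lookup: couples the test to the role and the exact copy.
function findByRoleAndText(
  page: UiElement[],
  role: UiElement["role"],
  text: string,
): UiElement | undefined {
  return page.find((el) => el.role === role && el.text === text);
}

const pageBefore: UiElement[] = [
  { role: "textbox", text: "Email" },
  { role: "button", text: "Submit" },
];

// Same page after a copy change: only the button label differs.
const pageAfter: UiElement[] = pageBefore.map((el) =>
  el.role === "button" && el.text === "Submit" ? { ...el, text: "Go!" } : el,
);

// The "test" passes on the old page and fails on the new one,
// even though the user can still submit the form.
const foundBefore = findByRoleAndText(pageBefore, "button", "Submit") !== undefined;
const foundAfter = findByRoleAndText(pageAfter, "button", "Submit") !== undefined;
```

Nothing about the user's ability to submit the form changed between the two pages, yet the lookup only succeeds on the first one.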

At heal.dev we’ve been set on bridging that gap to make testing more efficient.

But it’s not only about efficiency and test automation being a huge pain. More and more, it will be impossible to automate testing interfaces with legacy approaches. More and more, we’ll want to check that the generated interface supports a user goal under some UX constraints, not that there’s an HTML element with role="button" and text "submit".

As UIs become more adaptive, automated testing will need to (finally!) focus on user scenarios, not on selectors.
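As a rough sketch of what a scenario-level check could look like, assume each generated interface can be described as a map of screens and the actions available on them. The test then asks "can the user reach the goal in at most N interactions?" instead of "does this exact button exist?". The ScreenMap model and the function names are hypothetical, and this is a simplification, not a description of how heal works.

```typescript
interface UiAction {
  label: string;   // whatever copy the generated UI chose
  leadsTo: string; // the screen the user lands on
}

// Hypothetical model of a generated interface: screen name -> available actions.
type ScreenMap = Partial<Record<string, UiAction[]>>;

// Breadth-first search: fewest interactions from start to goal, or null if unreachable.
function stepsToGoal(ui: ScreenMap, start: string, goal: string): number | null {
  const seen = new Set<string>([start]);
  let frontier: string[] = [start];
  let steps = 0;
  while (frontier.length > 0) {
    if (frontier.includes(goal)) return steps;
    const next: string[] = [];
    for (const screen of frontier) {
      for (const action of ui[screen] ?? []) {
        if (!seen.has(action.leadsTo)) {
          seen.add(action.leadsTo);
          next.push(action.leadsTo);
        }
      }
    }
    frontier = next;
    steps += 1;
  }
  return null;
}

// The scenario: the goal is reachable within a UX budget of maxSteps interactions.
function supportsGoal(ui: ScreenMap, start: string, goal: string, maxSteps: number): boolean {
  const steps = stepsToGoal(ui, start, goal);
  return steps !== null && steps <= maxSteps;
}

// Two differently generated UIs for the same "log in" scenario.
const newUserUi: ScreenMap = {
  home: [{ label: "Sign in", leadsTo: "login" }],
  login: [{ label: "Go!", leadsTo: "dashboard" }],
};
const returningUserUi: ScreenMap = {
  home: [{ label: "Continue as Ada", leadsTo: "dashboard" }],
};
// A generated UI that lost the login path entirely.
const brokenUi: ScreenMap = {
  home: [{ label: "Learn more", leadsTo: "about" }],
};
```

With these definitions, supportsGoal(newUserUi, "home", "dashboard", 2) and supportsGoal(returningUserUi, "home", "dashboard", 2) both hold even though the two UIs share no labels, while the same check on brokenUi fails.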

And that’s why the testing industry will need to adapt fast and build human-level QA that runs at the speed of automation. This is what we’re doing at heal!

If that resonates, get in touch! I love to discuss UI and testing (and learn more about cool personalized user onboarding projects that did work!)

Acknowledgements

Hat tip and heartfelt thanks to Murat Sutunc and Ryan Philips for inspiring this article.